Intellectual Fascia

What holds scientific discourses together?

Epistemic Status: Describing the general case suggested from my exposure to a finite set of specific events.

I am an academic in Computer Science, and I hope it will be a surprise to no one when I say that the vast majority of knowledge in papers depends on knowledge nowhere to be found in papers. I would say that this is less true across computer science than in many other fields, because many different communities require direct reproducibility of results (within some tolerance for different conditions) via code released by the authors of a paper. This is not nearly as much of a guarantee as it sounds, for three reasons:

(1) Even if you can run the code and get similar results, you don’t know what the code is actually doing, and often neither do the authors.

(2) What the evaluation metrics and scenarios used to evaluate X actually mean in terms of, say, the efficiency difference between two methods is often not obvious unless you’re in the field. Benchmarks have to make simplifying assumptions to serve as barometers of some inherent property people care about, which is probably not just one property and even more probably not well defined. When benchmarks are introduced, their authors make some of these assumptions clear and completely refuse to mention most of them, and they are often unaware of the most important factors that inherently make a benchmark narrow.

(3) Even if the code works and you understand the benchmark, you usually don’t understand how the authors came up with the specific settings with which they used the code given the specific things they tested on. A lot of the time it turns out they tried a lot of things before they tried the thing they showed you, and they don’t like to talk about how hard it was to find them, how badly it worked in so many cases, etc.

So, when you come to get a PhD what do you spend most of your time doing? You spend it getting acculturated. Everyone talks about this, but they keep it vague because if you look at it too hard it’s easy to get really cynical. The place it comes out the nicest (and most presentably) is in group meetings when people discuss papers: a first year will ask a question that makes perfect sense if you believe papers at face value, but that no one would even think to ask if they knew how the sausage is made. For example, a first year might ask “Why didn’t they try method X on problem Y?” but everyone knows that all the benchmarks for Y are defunct and don’t really test Y, so the authors had to write their way around this issue.

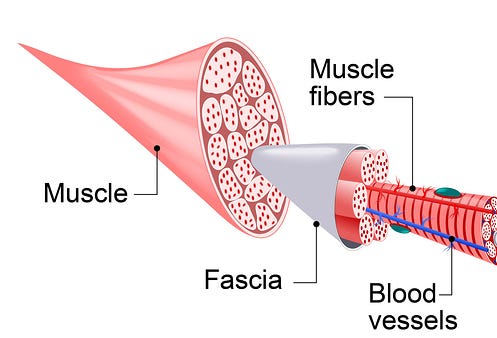

The process of acculturation to academia is the process of learning how to see and use the intellectual fascia that hold a community together. What are all the different properties that are assumed about all the different objects? And what about their different relationships? What do people mean when they say certain kinds of things about them? What are places where people have a different “tint” to their statements about different sets of things and how can you compensate for that “tint”?

The process of acculturation never stops, either. One of the reasons it is impossible to write good textbooks that are up to date with modern practice is that modern practice is just a bunch of fashionable methods and problems, without hindsight to tell you what stuck. Most textbooks are mostly justifying what stuck under a narrativization that feels satisfying. If you tried to do the history of these fields in any kind of verifiable way, you would look at the papers published around the times that the textbooks are talking about and feel very, very uncomfortable. People who do look at the history of disciplines are aware of how messy it is, but most of them are rewarded, both intrinsically and extrinsically, for coming up with their own pet theory, one that can be compressed down to an elevator pitch.

Even though people stop seeing some of the basic levels of this intellectual fascia, all serious researchers are aware of the layers that are still gooey enough to be subject to change. Most of us are gossiping about it with our colleagues, griping about this paper’s influence, or wondering whether X will take off. This is not idle chit-chat: this is the internal network of citations and relevance. In my subfield, papers are published extremely often. There are 2 top tier conferences a year in my subfield, plus plenty of highly regarded related conferences and second-tier conferences to go to in a pinch, but even this cannot keep up with our output. The main place people put their work first is Arxiv. When I first came to grad school, I would try to read the abstract of every paper that was posted on Arxiv in my subfield that day, giving each a quick skim and putting aside anything that looked interesting. It would generally take me around an hour, but it was a great help in understanding the space. Today this would easily fill up my work day, and probably push me to find time in the evening for more.

So how do people manage to keep track of the field in practice? There are specially designed tools, like Arxiv Sanity Preserver, which do get a decent amount of traffic. But in practice the answer is social: the first line of reconnaissance is Academic Twitter, with the hope that really important things will bubble up as your colleagues discover them and post about them.

But all the truly public spaces like these are just lettuce on your hamburger: they don’t give you even one of the five to nine servings of papers and GitHub repositories per day that the USDA (United States Department of Academia) recommends. Instead you have your lab meetings, where people present papers that are maybe only a few weeks behind, and where perhaps the senior students and professors will name-drop the good stuff that’s just coming out. Then you have your Slack channels; hopefully you’re part of a few good ones where people will drop links to Arxiv papers they just happened to see mentioned in some search result or by a friend of theirs. This might get you up to three servings of contemporary knowledge, but the real supply line is talking to people directly.

If you are trying to “keep in the know” then you talk to people and you talk to people a lot. Talking to people who know people who know people who know a paper just came out is how people know about papers. But knowing about papers isn’t enough: it’s the changing configuration of the fascia that matters. When you have a good relationship with someone in academia, you begin to talk about what does and doesn’t work “in practice”, i.e. who was really lying about what, and even more importantly who knows they’re lying and how much they know they’re lying, because not everyone is equally good at understanding what’s really going on. A lot of the laundering of false information is just a little bit of motivated reasoning by someone who would have to accept some crucial facts about the current situation of the field to even begin doing the kind of verification required to get more reliable results.

The most important thing, by far, is discussing what property of the current situation allowed X to make Y claim. Sometimes the answer is technical: they took advantage of some condition, whether it be a new technique, a specific aspect of a problem, or a defect of a benchmark. Sometimes the answer is social: X was able to claim Y with less evidence today than would have been required yesterday, because result Z makes Y seem almost like a foregone conclusion. But all of these little bits of info and developments add up to something more: the way the field as a whole is thinking. It does not think in one direction, but instead is made up of lots of little subnetworks of the papers and results and code and people that people “see” as grouping together, and thus coordinate around. It is verbal citations that actually constitute the motor force in moving the field forward, as they are the ones that rewire how people in the conversation think about their situation and thus their next experiment.

These are the citations and interpretations that matter most, the ones that happen behind closed doors. They are subject to just as much strategic interaction as the ones that happen in public, but their audience is not diffuse: it is another individual, who usually has some actual research interest regardless of what kind of politicking they may also be involved in. If you are lucky, one or more of your colleagues could be a “write ’em up” kind of person: they will write brief PDFs that clean up some of these thoughts, and these PDFs will be handed around like gold, because they are the most reusable currency of the backchannel. The PDFs, however, are a very dusty mirror, because they must be presentable to the public when inevitably they are passed around more than the original author intended.

Yet it is exactly because of the methods of this backchannel that it is ephemeral—and my suspicion is that rereading old research papers is so alienating exactly because the flesh has decayed away and all we have left is the fossil.

When I was an undergrad, applying to Ph.D. programs, I constructed a few "citational phylogenetic trees" to try and understand the state of research of this and that professor to see which I would want to work with and what their differences were. This turned out to be fairly fruitless: I could barely understand the math at the roots; tracing to the present meant that the papers either diverged 60 years ago and had almost nothing in common or diverged yesterday; and only one school wanted me and I was assigned to someone who had space.

If I'm understanding this correctly (I dropped out before doing any real research), this was an even more fruitless exercise than I previously imagined. Not only is a citational phylogenetic tree kind of hard to construct even if you look at many professors and their papers, but the really important information will not be found in citations.